Welcome to the fourth part of a series on Docker and Kubernetes networking. Similar to iptables: How Kubernetes Services Direct Traffic to Pods, this post focuses on how kube-proxy directs traffic, this time using IPVS (and ipset) in IPVS mode. Like the previous posts, we won’t use tools like Docker or Kubernetes but instead use the underlying technologies to learn how Kubernetes works.

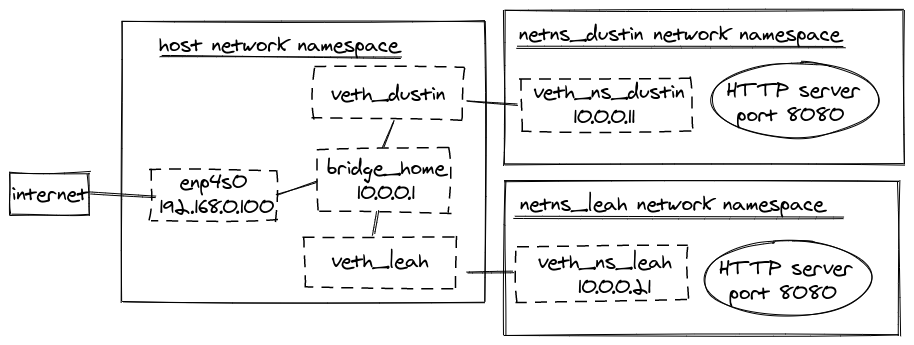

For example, recall that Kubernetes creates a network namespace for each pod. We’ll create a similar environment by manually creating network namespaces and starting Python HTTP servers to act as our “pods.”

This post aims to mimic what kube-proxy would do for the following service using IPVS and ipset.

|

|

By the end of this post, we’ll be able to run curl 10.100.100.100:8080 and have our request directed to an HTTP server in one of two network namespaces (or “pods”).

I recommend reading the previous posts first, especially #3. This post follows the same outline but uses IPVS instead of iptables.

- How do Kubernetes and Docker create IP Addresses?!

- iptables: How Docker Publishes Ports

- iptables: How Kubernetes Services Direct Traffic to Pods

Note: This post only works on Linux. I’m using Ubuntu 20.04, but this should work on other Linux distributions.

Why IPVS over iptables?

This post isn’t trying to sway anyone toward IPVS or iptables; it only covers how kube-proxy uses IPVS.

I recommend reading IPVS-Based In-Cluster Load Balancing Deep Dive for an overview of the performance benefits of ipset and IPVS and the advanced scheduling options IPVS supports.

Create virtual devices and start HTTP servers in network namespaces

We’re going to create the same environment as we did in iptables: How Kubernetes Services Direct Traffic to Pods. I’m not going to explain it again this time, so please refer to the previous post for more information.

|

|

In another terminal run:

|

|

Open another terminal and run:

|

|

Verify the following commands all succeed:

|

|

Our environment once again looks like this:

Install required tools

To use IPVS and, later, ipset, we’ll need to install two tools, ipvsadm and ipset.

On Ubuntu, we can install these by running:

|

|
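As a rough sketch of this install step, assuming a Debian or Ubuntu host with apt-get available: the `run` helper and the `install_tools` function are illustrative names of my own, not part of either tool. The helper only prints each command unless APPLY=1 is set, so the snippet is safe to try first.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Install the two userspace tools used in the rest of the post.
install_tools() {
  run apt-get install --yes ipset ipvsadm
}

install_tools
```

Running it with `APPLY=1` set in the environment performs the actual installation.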

Note: at the time of writing this, I’m using ipset 7.5-1~exp1 and ipvsadm 1:1.31-1.

Now, we’re ready to start learning the new parts!

Create a virtual service via IPVS

We’ll start using IPVS by first creating a virtual service:

|

|
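A minimal sketch of creating the virtual service, based on the address, port, protocol, and scheduler described in this section. The function names and the `run` dry-run helper are hypothetical; the ipvsadm long options themselves (`--add-service`, `--tcp-service`, `--scheduler`, `--list`, `--numeric`) are standard.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Create a TCP virtual service on the service IP with the rr scheduler.
create_virtual_service() {
  run ipvsadm --add-service --tcp-service 10.100.100.100:8080 --scheduler rr
}

# Show the current IPVS table with numeric addresses and ports.
list_virtual_services() {
  run ipvsadm --list --numeric
}

create_virtual_service
list_virtual_services
```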

Note: I recommend running sudo ipvsadm --list --numeric after running ipvsadm commands to see the impact.

Notice that we specify a tcp-service because the service YAML in the introduction uses the TCP protocol.

A neat bonus for IPVS is the ease of selecting a scheduler. In this case, rr for round-robin is chosen, which is the default scheduler kube-proxy uses.
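To make round-robin concrete, here is a toy simulation in plain shell. It is not how IPVS is implemented in the kernel, only an illustration of the selection order for equally weighted destinations. `pick_backend` is a hypothetical helper, and it assumes at least one backend is passed.

```shell
# Print the backend chosen for the Nth request (0-based) in round-robin order.
# Usage: pick_backend N backend1 backend2 ...
pick_backend() {
  n=$1
  shift                  # drop N so only the backends remain
  shift $(( n % $# ))    # skip ahead to the backend whose turn it is
  echo "$1"
}

for request in 0 1 2 3; do
  pick_backend "$request" 10.0.0.11:8080 10.0.0.21:8080
done
# prints, one per line:
# 10.0.0.11:8080, 10.0.0.21:8080, 10.0.0.11:8080, 10.0.0.21:8080
```

Requests alternate between the two destinations, which is the pattern we should see from curl once both servers are attached to the virtual service.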

Note: as of today, kube-proxy only allows picking a single scheduler type for every service. The plan mentioned in IPVS-Based In-Cluster Load Balancing Deep Dive is for kube-proxy to eventually let each service specify its own scheduler!

We now need to give our virtual service a destination. We’ll start by sending traffic to the HTTP server running in the netns_dustin network namespace.

|

|

This command instructs IPVS to direct TCP requests for 10.100.100.100:8080 to 10.0.0.11:8080. It’s important to specify --masquerading here, as this effectively handles NAT for us as we previously did in iptables: How Kubernetes Services Direct Traffic to Pods. By not specifying --masquerading, IPVS attempts to use routing to direct the traffic, which will fail.
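The step described above can be sketched as follows, using the addresses from this section. `add_destination` and the `run` dry-run helper are hypothetical names; `--add-server`, `--real-server`, and `--masquerading` are standard ipvsadm options.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Attach a real server (ip:port in $1) to the virtual service, using
# masquerading (NAT) as the packet-forwarding method.
add_destination() {
  run ipvsadm --add-server --tcp-service 10.100.100.100:8080 \
    --real-server "$1" --masquerading
}

add_destination 10.0.0.11:8080
```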

Now, run the following:

|

|

We successfully used IPVS!

Enable a network namespace to communicate with the virtual service

Try running the following:

|

|

and unfortunately, this doesn’t work.

To get this to work, we can assign the 10.100.100.100 IP address to a virtual device.

I do not understand why the IP address needs to be assigned. If you know, please let me know! I only know that this works, and it’s what Kubernetes does today.

I initially assumed having this IP address as a virtual service would have been enough. In iptables: How Kubernetes Services Direct Traffic to Pods, we instruct bridges to call iptables, and iptables has a rule for 10.100.100.100. I’m speculating the gap is that bridges don’t call IPVS, but assigning the IP address to a virtual device lets routing via IPVS work correctly.

We don’t need to attach the IP address to any device in particular, so we’ll do what Kubernetes does. Kubernetes creates a virtual device that is a dummy type.

|

|

So now we’ve used veth, bridge, and dummy types.

Attach the IP address to our dustin-ipvs0 device by running:

|

|
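A sketch of the two steps above: creating the dummy device and attaching the service IP to it. The device name comes from this post; the /32 prefix length is my assumption, chosen so that only the single service address is assigned. The function names and the `run` helper are hypothetical.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Create a virtual device of type dummy.
create_dummy_device() {
  run ip link add dev dustin-ipvs0 type dummy
}

# Assign the virtual service IP to the dummy device (/32 is an assumption).
assign_service_ip() {
  run ip addr add 10.100.100.100/32 dev dustin-ipvs0
}

create_dummy_device
assign_service_ip
```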

Note: we can now ping 10.100.100.100. I’m not sure how this is useful, but it didn’t work with the iptables solution.

Finally, we’ll need to enable forwarding traffic from one device to another, so like we did in the previous post, run:

|

|

And check that the following works:

|

|

Making progress!

Enable hairpin connections

Let’s try running:

|

|

This command will fail. No worries, we learned about hairpin and promiscuous mode in iptables: How Kubernetes Services Direct Traffic to Pods, so we can fix this!

Enable promiscuous mode by running:

|

|
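For reference, enabling promiscuous mode on a bridge with iproute2 looks roughly like the sketch below. The bridge name is not stated in this post, so `bridge_home` here is purely an assumed placeholder; substitute whatever bridge was created during the environment setup. `enable_promisc` and `run` are hypothetical helper names.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Turn on promiscuous mode for the device named in $1.
enable_promisc() {
  run ip link set dev "$1" promisc on
}

# bridge_home is an assumed name, not taken from this post.
enable_promisc bridge_home
```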

And let’s try running

|

|

and this fails, much to my surprise. Learning IPVS wasn’t too bad, and then I spent an afternoon trying to figure out why enabling promiscuous mode didn’t solve this problem.

It turns out hairpin/masquerade doesn’t work for IPVS without enabling the following setting:

|

|
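The setting in question is most likely the IPVS connection-tracking sysctl, net.ipv4.vs.conntrack, which lets netfilter's conntrack see IPVS connections. A sketch, with hypothetical helper names:

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Enable connection tracking for connections handled by IPVS.
enable_ipvs_conntrack() {
  run sysctl --write net.ipv4.vs.conntrack=1
}

enable_ipvs_conntrack
```

Note that this sysctl only exists once the ip_vs kernel module is loaded, which has already happened by this point because we created a virtual service.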

We can then run:

|

|

Success! I don’t quite understand how enabling conntrack fixes the issue, but it sounds like this setting also handles supporting masquerade traffic for IPVS.

Improve masquerade usage

During our environment setup, as in the previous posts, we ran:

|

|

This rule masquerades all traffic coming from 10.0.0.0/24. Kubernetes does not do this by default. For performance reasons, Kubernetes tries to be precise about which traffic it needs to masquerade.

We can start by deleting the rule via:

|

|

We can then be precise and add the following rule for the netns_dustin network namespace:

|

|
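The steps above (removing the broad rule, then adding a narrower one) could look like the following sketch. The rule text is reconstructed from the description in this section: I am assuming the rules live in the POSTROUTING chain of the nat table and that the narrow rule matches the single address 10.0.0.11/32. `masquerade_rule` and `run` are hypothetical helper names.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Add or remove a source-based masquerade rule.
# $1 is --append or --delete, $2 is the source address or CIDR.
masquerade_rule() {
  run iptables --table nat "$1" POSTROUTING --source "$2" --jump MASQUERADE
}

masquerade_rule --delete 10.0.0.0/24    # drop the broad rule from setup
masquerade_rule --append 10.0.0.11/32   # masquerade only netns_dustin traffic
```

Calling `masquerade_rule --delete 10.0.0.11/32` later removes the narrow rule again.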

This rule works, and the previous curl commands continue to work too. We’ll have to add a similar rule for each network namespace, such as netns_leah. But wait, one of the main advantages of IPVS was avoiding a large number of iptables rules. With all of these masquerade rules, we’re going to balloon up iptables again.

Fortunately, there’s another tool we can use, ipset. kube-proxy also leverages ipset when in IPVS mode.

Let’s start by cleaning up the iptables rule we just created:

|

|

To begin using ipset, we’ll first create a set.

|

|

Note: I recommend running sudo ipset list to see the changes after running ipset commands.

This command creates a set named DUSTIN-LOOP-BACK, a hash-based set whose entries are triples that we’ll use to hold a destination IP, destination port, and source IP.

We’ll create an entry for the netns_dustin network namespace:

|

|

This entry matches the behavior when we make a hairpin connection (a request from netns_dustin to 10.100.100.100:8080 is sent back to 10.0.0.11:8080 [netns_dustin]).

Now, we’ll add a single rule to iptables to masquerade traffic when the request matches this ipset:

|

|
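Putting the three ipset steps together, a sketch might look like this. It assumes the set type is hash:ip,port,ip, that the hairpin entry uses the pod address for both the destination and source, and that the iptables rule matches the set as dst,dst,src in the nat table's POSTROUTING chain. The function names and the `run` helper are hypothetical.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Create a set whose entries are (ip, port, ip) triples.
create_loopback_set() {
  run ipset create DUSTIN-LOOP-BACK hash:ip,port,ip
}

# Add a hairpin entry for the pod IP in $1: after IPVS rewrites the
# destination, both destination and source are the pod itself.
add_loopback_entry() {
  run ipset add DUSTIN-LOOP-BACK "$1,tcp:8080,$1"
}

# One iptables rule masquerades anything matching an entry in the set.
masquerade_loopback() {
  run iptables --table nat --append POSTROUTING \
    --match set --match-set DUSTIN-LOOP-BACK dst,dst,src --jump MASQUERADE
}

create_loopback_set
add_loopback_entry 10.0.0.11
masquerade_loopback
```

The appeal of this design is that adding more pods only adds set entries, while the number of iptables rules stays at one.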

Once again, the following curl commands all work:

|

|

ipset is not specific to IPVS. We can leverage ipset with just iptables, and some CNI plugins, like Calico, do!

Add another server to the virtual service

Now let’s add the netns_leah network namespace as a destination for our virtual service:

|

|

And add 10.0.0.21 as an entry to the DUSTIN-LOOP-BACK ipset so that the hairpin connection works for the netns_leah network namespace.

|

|
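Both steps for netns_leah can be sketched as one helper. This assumes the server in netns_leah listens on 10.0.0.21:8080, mirroring netns_dustin; `add_backend` and `run` are hypothetical names.

```shell
# Hypothetical dry-run helper: prints the command by default,
# and runs it with sudo only when APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = "1" ]; then sudo "$@"; else echo "sudo $*"; fi
}

# Register the pod IP in $1 as a real server and add its hairpin entry.
add_backend() {
  run ipvsadm --add-server --tcp-service 10.100.100.100:8080 \
    --real-server "$1:8080" --masquerading
  run ipset add DUSTIN-LOOP-BACK "$1,tcp:8080,$1"
}

add_backend 10.0.0.21
```

Compared with the iptables approach, adding a destination here is two short commands, with no chains to order and no probabilities to recompute.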

Remember how many chains we had to account for when using iptables? And we had to be careful about the order of the rules and the probability assigned to each.

Try running the following command a few times to see the round-robin scheduler in effect:

|

|

Future research

After exploring IPVS, I can understand the desire to use IPVS even in smaller clusters for the scheduler settings alone. ipset is also super cool and by itself helps with kube-proxy performance issues found in massive clusters.

In the future, I’d like to learn more about

- conntrack

- how some CNIs use virtual tunnels instead of host routing

- how some CNIs use BGP peering

  - I talk about using BGP and BIRD to do this in Kubernetes Networking from Scratch: Using BGP and BIRD to Advertise Pod Routes.

Have any info on these topics or any questions/comments on this post? Please feel free to connect on LinkedIn or GitHub.
